[ nodar sdk ]

Software Development Kit

Download. Integrate. Deploy.

Build high-performance 3D perception solutions in minutes with NODAR's production-ready stereo engine and your own cameras.

Download Your Free 14-Day Trial

State of the art automotive lidar vs NODAR SDK

Choose a license or download a free trial and start integrating today.

Production pricing available — with licensing starting as low as $150/stereo camera.

What's Included

Patented calibration and stereo matching technology for unparalleled accuracy and long-range stereo camera sensing

Occupancy map and object detection GridDetect software (optional)

Precompiled Hammerhead SDK for x86 and ARM

NODAR Viewer for depth and point-cloud visualization

C++ and Python APIs

Full developer documentation

Superhuman vision.

Unmatched precision.

The NODAR SDK transforms any pair of cameras into a high-performance 3D sensor for autonomous perception with industry-leading range, resolution, and reliability.

Inputs

Your Existing Cameras

Left Image

Right Image

NODAR SDK

Autocalibration

Compensating for camera vibrations every frame with subpixel accuracy

Stereo Matching

Geometric calculation of depth for every pixel

Occupancy Mapping

Particle filter and fusion layer

Your Computer

(NVIDIA Orin, x86+NVIDIA GPU)

Outputs

Point Cloud (RGBXYZ)

Depth Maps

Velocity

Rectified Images

Object List

Occupancy Map

Display your outputs in real time

Download

Integrate

Deploy

Developer Resources

All the resources, tools, and support needed to integrate our cutting-edge stereo vision software into your hardware solutions. Whether you’re building autonomous systems or innovative imaging products, we’re here to help you create the future.

Testimonials

If someone showed us a lidar with such performance, it would be a no-brainer to move forward with integration.

Global Tractor Supplier

In a head-to-head trial with all other multi-camera competitors, NODAR provides the most accurate and only real-time wide-baseline stereo vision system. All other systems compared to NODAR were post-processed due to the high computational load.

Global Automotive OEM

We have been working on stereo for decades and your system is impressive.

Global Automotive Tier 1 Supplier

Applications

Trucks

Ultra-long-range small-object detection: detect a 25 cm object at 305 m, leaving time to stop or avoid it.

Passenger Cars

Precise long-range object detection for L3 and above.

Air Mobility

Long-range detection in any direction improves safety.

Heavy Equipment

Resilience to dust and high vibration, and best-in-class performance in low visibility.

Ferry

Robustness with moving horizon and ultra-fine precision at short distances for docking.

Rail

1000m+ range obstruction detection for collision avoidance and mapping.

Last Mile Delivery

Low-cost, flexible camera mounting, low compute and fine precision - perfect for mobile robots.

Robotaxi

Sensor fusion with lidar provides redundancy while enabling safe highway driving.

Agriculture

Resilience to dust and vibration for autonomous operation, and precision for spout alignment.

NODAR SDK Benefits

The NODAR SDK provides accurate, reliable depth perception in real-world conditions including dust, low light, rain, and fog. Powered by patented auto-calibration algorithms, it transforms stereo image inputs from existing cameras into consistent, high-resolution depth data with minimal setup, enabling scalable, cost-effective integration across diverse platforms and use cases.

Accuracy and Range

High accuracy by computing the true depth of every pixel based on stereoscopic parallax rather than crudely interpolating from known objects.

Low Visibility Performance

High performance in difficult conditions such as high-dust, low-light, rain, fog, and direct sunlight.

Consistency of Results

Patented auto-calibration technology actively compensates for camera perturbations due to wind, road shock, vibration, and temperature.

Ruggedness and Reliability

High long-term reliability due to continuous calibration.

Easy Installation

Quick setup with a single software installer and thorough documentation.

Value

No need for expensive new hardware - the SDK can be used with existing cameras.
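The "Accuracy and Range" point rests on standard stereo triangulation: for a rectified pair, depth follows from disparity, baseline, and focal length. The sketch below illustrates that textbook relationship only; it is not NODAR's implementation, and the pinhole model, function names, and sample values are assumptions made for illustration.

```python
import math


def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels from horizontal FOV (assumes no lens distortion)."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def depth_from_disparity(disparity_px: float, baseline_m: float, focal_px: float) -> float:
    """Triangulated depth Z = f * B / d for a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


# Illustrative values: 2880-pixel-wide image, 65-degree FOV, 1 m baseline.
f_px = focal_length_px(2880, 65.0)
for d in (50.0, 10.0, 2.0):
    z = depth_from_disparity(d, 1.0, f_px)
    print(f"disparity {d:5.1f} px -> depth {z:7.1f} m")
```

Because depth is inversely proportional to disparity, a wider baseline or a longer focal length yields more disparity at a given range, which is why those two parameters dominate long-range accuracy.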

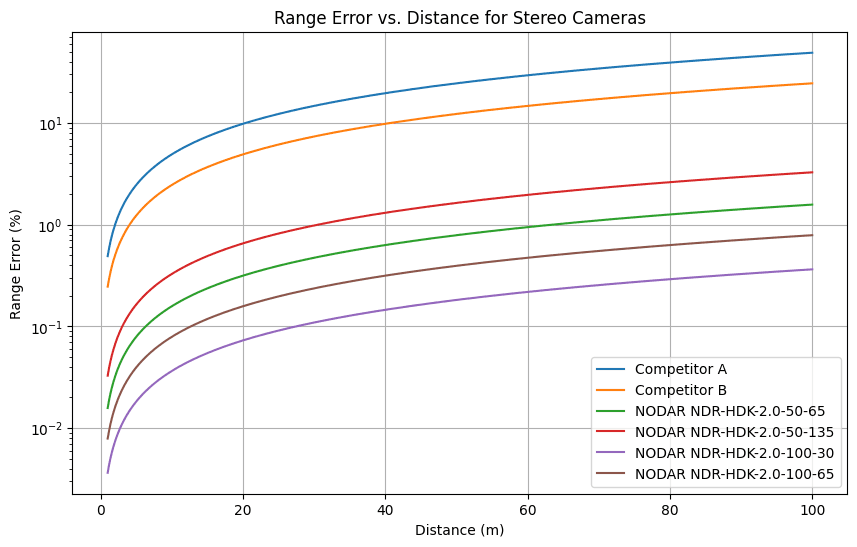

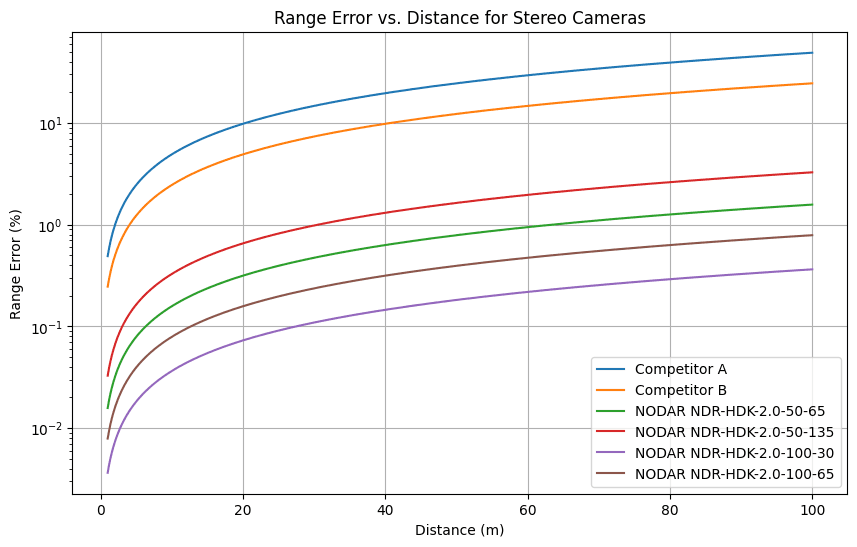

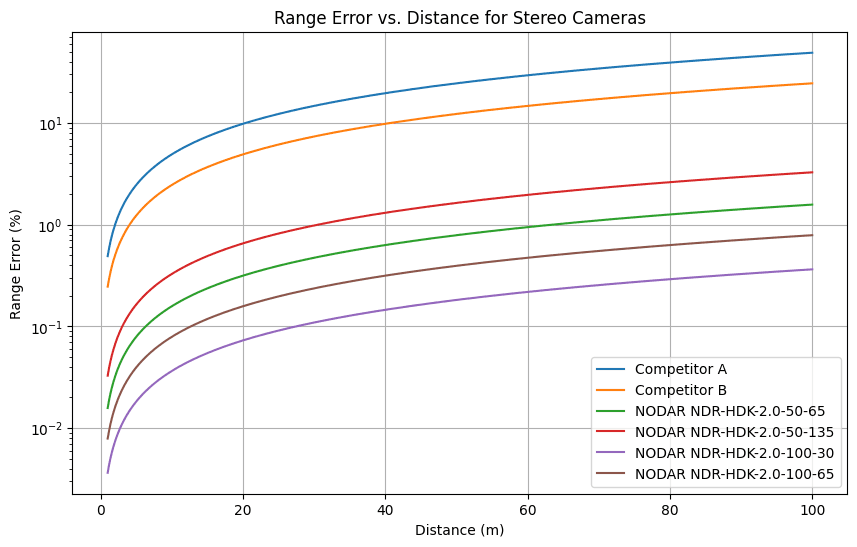

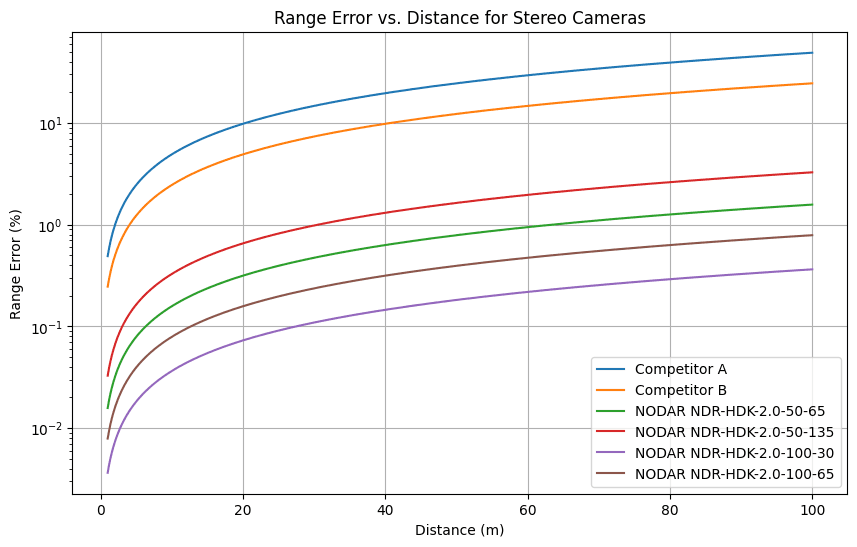

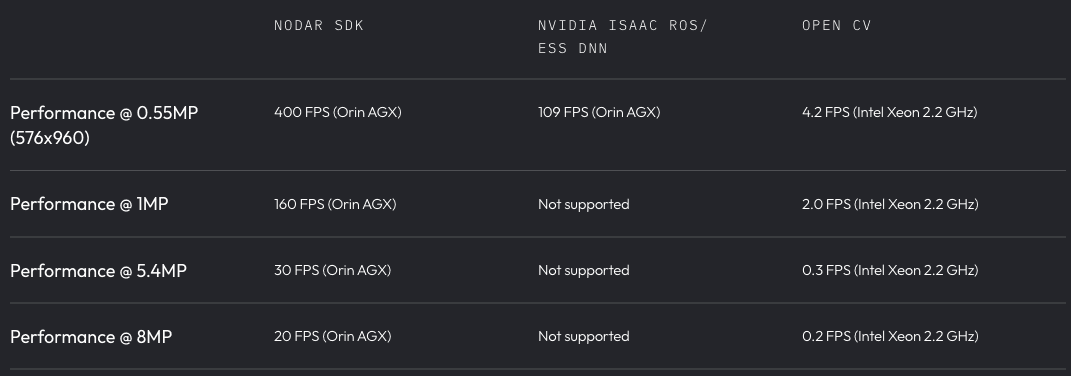

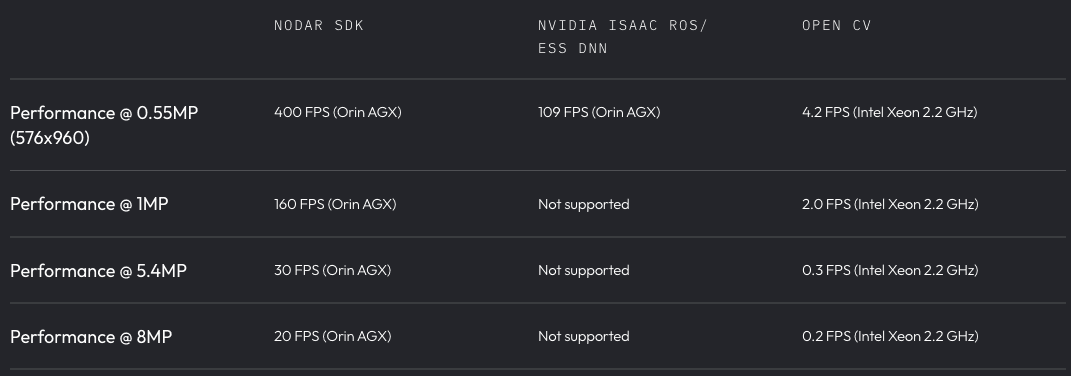

Competitive Analysis

How does NODAR compare to other stereo vision options?

This comparison covers options that work with generic cameras, not pre-built stereo vision boards with a fixed baseline, low-performance global shutters, low resolution, or low-quality optics. There are some free options, but remember that you get what you pay for!

| | NODAR SDK | NVIDIA Isaac ROS/ESS DNN | OpenCV |
|---|---|---|---|
| License | Commercial | Commercial | Open Source |
| Primary Use | AV, ADAS, Trucks, Rail, Tractors, Airplanes | Robotics & Warehouse Automation | DIY, Prototyping, Research |
| Max Detection Range | 1,000m+ | Short to Mid (0.5m – 50m) | Baseline Dependent (Limited) |
| Frame-by-Frame Auto Calibration | Yes. Adjusts every frame to fix vibration/shocks | No. Relies on rigid mounts; DNN is robust to some error but doesn't "re-calibrate" | No. Requires manual chessboard or offline calibration |
| Hardware Agnostic? | Yes (Off-the-shelf cameras) | Partially (Optimized for Jetson/NVIDIA) | Yes |
| Supported Computers | CPU: ARM or x86; GPU: NVIDIA | NVIDIA Jetson | Mainly x86 support |
| Core Algorithm | Wide-baseline Geometry | Deep Learning (ESS DNN) | SGBM |
| Accuracy | Excellent | Good | Fair |
| Computational Speed | Fastest | Middle | Slowest |
| ROS Support | Yes | Yes | No, not natively |
| ZMQ Support | Yes | No | No |
| Vibration Tolerance | Extreme. Supports unlinked, non-rigid mounts. | Low. Expects a rigid bridge or factory-sealed unit. | None. Any shift breaks the epipolar geometry. |

Speed

NODAR Hammerhead can process 160M points/s, which is 4-100x faster than other options.

| | NODAR SDK | NVIDIA Isaac ROS/ESS DNN | OpenCV |
|---|---|---|---|
| Performance @ 0.55MP (576x960) | 400 FPS (Orin AGX) | 109 FPS (Orin AGX) | 4.2 FPS (Intel Xeon 2.2 GHz) |
| Performance @ 1MP | 160 FPS (Orin AGX) | Not supported | 2.0 FPS (Intel Xeon 2.2 GHz) |
| Performance @ 5.4MP | 30 FPS (Orin AGX) | Not supported | 0.3 FPS (Intel Xeon 2.2 GHz) |
| Performance @ 8MP | 20 FPS (Orin AGX) | Not supported | 0.2 FPS (Intel Xeon 2.2 GHz) |

Includes benchmarks for OpenCV computations.
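As a rough cross-check of the throughput figure, the frame rates above can be converted into points per second. This sketch assumes one depth point per pixel per frame (dense output), which is an assumption on our part; the row values are copied from the Speed figures, and the function names are illustrative.

```python
# (label, pixels per frame, NODAR SDK FPS on Orin AGX), copied from the Speed figures
NODAR_ROWS = [
    ("0.55 MP (576x960)", 576 * 960, 400),
    ("1 MP", 1_000_000, 160),
    ("5.4 MP", 5_400_000, 30),
    ("8 MP", 8_000_000, 20),
]


def points_per_second(pixels_per_frame: int, fps: float) -> float:
    """Dense-output throughput: every pixel yields one 3D point per frame (assumed)."""
    return pixels_per_frame * fps


def speedup(fps_a: float, fps_b: float) -> float:
    """How many times faster option A is than option B at the same resolution."""
    return fps_a / fps_b


for label, pixels, fps in NODAR_ROWS:
    mpts = points_per_second(pixels, fps) / 1e6
    print(f"{label:18s} {fps:4d} FPS -> {mpts:6.1f} M points/s")

# Ratios at the resolutions where a comparison figure is listed
print(f"vs Isaac ROS/ESS at 0.55 MP: {speedup(400, 109):.1f}x")
print(f"vs OpenCV at 8 MP:           {speedup(20, 0.2):.0f}x")
```

Under that assumption, the 1 MP, 5.4 MP, and 8 MP rows all land close to the quoted 160M points/s, and the listed frame rates span roughly the 4x to 100x range.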

Price

Replace your LiDAR with NODAR and cut sensor costs by 80%. Current LiDAR ($500–$15,000) makes mass-market margins impossible. At $1,000 per unit, autonomy is a luxury experiment. At $150, it’s a global standard.

| | NODAR SDK | Automotive LiDAR (Long-Range) | High-End LiDAR (Robotaxi) |
|---|---|---|---|
| Hardware Cost | Low ($40 for 2x 8MP cameras, 100K qty) | Moderate ($500–$1,000) | High ($5,000–$15,000+) |
| Software/Licensing | Per-Unit (license fee) | Included with hardware | Included with hardware |
| Unit Cost (Qty 1M) | $190 | $600–$1,200 | $10,000+ |
| Data Density | ~100 Million points/sec | ~1–2 Million points/sec | ~2–5 Million points/sec |
| Maintenance | Low (no moving parts) | Moderate (solid-state is better) | High (mechanical spin wears out) |
| Integration Cost | Moderate (requires calibration/GPU) | High (calibration + power draw) | Extreme (massive compute + cooling) |
| Production Capability | Infinite (for software) | <100K units/year? | <10K units/year? |
| Tariffs | None | High (China) | High (90% China) |
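The unit-cost comparison can be reproduced with simple arithmetic. The sketch below assumes the $190 figure is the sum of the $40 camera pair and the $150 starting license fee quoted earlier on this page; that breakdown is our reading of the figures, not a published price formula, and the function names are illustrative.

```python
def unit_cost(hardware_usd: float, license_usd: float) -> float:
    """Per-unit cost as camera hardware plus a per-unit software license (assumed breakdown)."""
    return hardware_usd + license_usd


def savings_fraction(nodar_usd: float, lidar_usd: float) -> float:
    """Fraction of sensor cost saved by replacing a LiDAR at lidar_usd."""
    return 1.0 - nodar_usd / lidar_usd


nodar = unit_cost(hardware_usd=40, license_usd=150)
print(f"NODAR unit cost: ${nodar:.0f}")
for lidar in (600, 1000, 1200):
    print(f"vs ${lidar} LiDAR: {savings_fraction(nodar, lidar):.0%} lower")
```

Against a $1,000 automotive LiDAR this comes out at about 81%, consistent with the "80%" headline; the saving is smaller at the low end of the LiDAR price range and larger at the high end.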

Accuracy

NODAR is orders of magnitude more accurate than any other commercial stereo vision camera.

Competitor A: 50-mm baseline, 90-deg FOV, 1280 pixels (H)

Competitor B: 100-mm baseline, 90-deg FOV, 1280 pixels (H)

NODAR NDR-HDK-2.0-50-65: 500-mm baseline, 65-deg FOV, 2880 pixels (H)

NODAR NDR-HDK-2.0-50-135: 500-mm baseline, 135-deg FOV, 2880 pixels (H)

NODAR NDR-HDK-2.0-100-30: 1000-mm baseline, 30-deg FOV, 2880 pixels (H)

NODAR NDR-HDK-2.0-100-65: 1000-mm baseline, 65-deg FOV, 2880 pixels (H)
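To see why baseline, field of view, and pixel count matter so much, the configurations listed above can be plugged into the standard first-order stereo error model, where depth uncertainty grows as Z² · δd / (f · B). This is a sketch under stated assumptions: an ideal pinhole model (a poor fit for the 135-degree lens), and the same 0.2-pixel disparity precision applied to every row, even though that figure is only specified for NODAR. It is not measured data.

```python
import math

DISPARITY_PRECISION_PX = 0.2  # NODAR's specified precision; assumed for all rows

# (label, baseline in m, horizontal FOV in degrees, horizontal pixels), from the list above
CONFIGS = [
    ("Competitor A", 0.05, 90.0, 1280),
    ("Competitor B", 0.10, 90.0, 1280),
    ("NODAR NDR-HDK-2.0-50-65", 0.50, 65.0, 2880),
    ("NODAR NDR-HDK-2.0-50-135", 0.50, 135.0, 2880),
    ("NODAR NDR-HDK-2.0-100-30", 1.00, 30.0, 2880),
    ("NODAR NDR-HDK-2.0-100-65", 1.00, 65.0, 2880),
]


def focal_length_px(width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels from horizontal FOV."""
    return (width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def depth_error_m(range_m: float, baseline_m: float, focal_px: float,
                  disparity_error_px: float = DISPARITY_PRECISION_PX) -> float:
    """First-order depth uncertainty: dZ = Z^2 * dd / (f * B)."""
    return range_m ** 2 * disparity_error_px / (focal_px * baseline_m)


def error_table(range_m: float):
    """Depth uncertainty for every listed configuration at one range."""
    return [
        (label, depth_error_m(range_m, b, focal_length_px(w, fov)))
        for label, b, fov, w in CONFIGS
    ]


for label, err in error_table(100.0):
    print(f"{label:26s} ~{err:6.2f} m depth uncertainty at 100 m")
```

The model makes the trade-off explicit: for a fixed disparity precision, error shrinks in proportion to baseline and focal length, so a 1000-mm baseline with a narrow lens sits far below a 50-mm baseline with a wide one.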

System Requirements

Camera support: Tested between 1MP and 8MP, RGB, LWIR, global and rolling shutter

Lenses: Tested for 5 - 180 degree FOV

Compute hardware: NVIDIA GPU (for example, RTX 4090, GeForce RTX 3080, Jetson Orin)

Processor: ARM or x86¹

Specifications

Calibration: Continuous (every frame)

Baseline: Tested up to 3m

Field of view: Tested between 5 and 180 degrees

API: ZMQ and ROS2

Precision: 0.2 pixel in disparity

Camera alignment tolerance: +/-3°

Stereo matching algorithm: Deterministic signal processing algorithm

Vibration tolerance: 10 pixels per frame

¹ Software-only technology enables porting to Intel+GPU or other processors

Frequently Asked Questions

How is NODAR different?

NODAR’s Hammerhead technology enables unparalleled detection of small objects at large distances by supporting large-baseline mounts (0.5-3m or larger) with independently mounted (no connecting bar), high-resolution cameras. This is accomplished with patented algorithms that automatically calibrate in real-time to compensate for misalignments that arise from larger baselines.

Does Hammerhead work in low visibility?

With recent improvements in camera sensors, Hammerhead is effective in low-light conditions, such as city streets or headlight-illuminated scenes at night. We have also tested well against LiDAR in dusty, rainy, and foggy conditions, and Hammerhead technology can also be adapted to work with infrared or thermal cameras.

What is included in Hammerhead’s HDK 2.0?

The HDK includes both hardware and software to evaluate and integrate with Hammerhead. The hardware is comprised of an Electronic Control Unit (ECU), 2 cameras, and all necessary connection cables. The software includes applications for demoing Hammerhead, collecting data, calibrating the initial camera setup, and an integration API.

How long does the HDK installation take?

The HDK is designed to work immediately out of the box. You would only need to connect a power source and a display to see Hammerhead technology in action.

How does calibration work?

Stereo cameras are known to lose alignment due to vehicle vibration, temperature fluctuations, and long-term, small component movements. Using unique patented technology, Hammerhead automatically calibrates on every video frame to compensate for these alignment variations.
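To give a sense of scale, the sketch below estimates how small a camera rotation is enough to shift the image by a given number of pixels. It illustrates the geometry only and says nothing about how Hammerhead's calibration works internally. It assumes a pinhole model, a 2880-pixel-wide image with a 30° lens (one of the HDK configurations), and uses the 0.2-pixel precision and 10-pixel vibration tolerance from the specifications as reference points.

```python
import math


def focal_length_px(width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels from horizontal FOV."""
    return (width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def pixel_shift(angle_deg: float, focal_px: float) -> float:
    """Image shift near the optical axis caused by rotating a camera by angle_deg."""
    return focal_px * math.tan(math.radians(angle_deg))


def angle_for_shift_deg(shift_px: float, focal_px: float) -> float:
    """Rotation that produces a given pixel shift near the optical axis."""
    return math.degrees(math.atan(shift_px / focal_px))


f_px = focal_length_px(2880, 30.0)  # assumed camera: 2880 px wide, 30-degree FOV
for shift in (0.2, 10.0):
    print(f"{shift:4.1f} px shift <-> {angle_for_shift_deg(shift, f_px):.4f} deg rotation")
```

With these assumptions, a rotation of only a few thousandths of a degree already moves the image by 0.2 pixel, which is why a one-time factory calibration struggles to hold on a vibrating vehicle.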

What mounting configurations are supported for HDK 2.0?

The entire HDK camera assembly can be mounted to a vehicle or structure via tripod mounting holes. Even though the cameras in the HDK are mounted at a fixed baseline, production versions of Hammerhead can be shipped with custom baselines and mounts depending on specific application requirements.

What is the HDK's camera resolution?

As shipped with the HDK, a 5-megapixel resolution is supported. If desired, additional resolutions can also be supported with different camera hardware.

What computing platforms are supported by the SDK?

The SDK is provided as prebuilt .deb packages for the following configurations:

Ubuntu 20.04

CUDA 11.4 (AMD64, ARM64)

CUDA 12.0 (AMD64)

Ubuntu 22.04

CUDA 12.1, 12.2, 12.3, 12.6, 13.0 (AMD64)

CUDA 12.2, 12.6 (ARM64)

Ubuntu 24.04

CUDA 12.9, 13.0 (AMD64)

Typical performance (5.4 MP images)

Jetson Orin AGX: ~5–10 fps

NVIDIA RTX A5500 (Laptop): ~15–20 fps

NVIDIA GeForce RTX 4090 (Desktop): ~20–25 fps

Performance varies based on configuration, including image resolution (1–8 MP), bit depth (8-bit vs. 16-bit), and whether optional modules such as GridDetect are enabled.

As a general guideline:

Modern laptops typically achieve ~15–20 fps

Desktop systems with high-end GPUs typically achieve ~20–30 fps
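The package matrix in this answer can be captured as a small lookup for pre-flight checks in a deployment script. This is a convenience sketch, not part of the SDK: the dictionary simply transcribes the configurations listed above, and the `is_supported` helper is a hypothetical name.

```python
# Ubuntu release and CPU architecture -> CUDA versions with prebuilt .deb packages,
# transcribed from the list in this answer
SUPPORTED = {
    ("20.04", "amd64"): {"11.4", "12.0"},
    ("20.04", "arm64"): {"11.4"},
    ("22.04", "amd64"): {"12.1", "12.2", "12.3", "12.6", "13.0"},
    ("22.04", "arm64"): {"12.2", "12.6"},
    ("24.04", "amd64"): {"12.9", "13.0"},
}


def is_supported(ubuntu: str, arch: str, cuda: str) -> bool:
    """True if a prebuilt package is listed for this Ubuntu/architecture/CUDA combination."""
    return cuda in SUPPORTED.get((ubuntu, arch.lower()), set())


print(is_supported("22.04", "ARM64", "12.6"))
print(is_supported("24.04", "arm64", "12.9"))
```

The published list of supported systems in the documentation remains the authoritative source; a table like this only saves a manual comparison.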

What are the minimum recommended compute specs for the SDK?

We currently require an NVIDIA GPU. Our binaries rely on CUDA and target Ubuntu 20.04, 22.04, and 24.04 on ARM64 and AMD64 (Intel and AMD CPUs). For a complete list of supported systems, please visit https://docs.nodarsensor.net/index.html#supported-systems

Does the SDK output classify specific objects, like a car, or the occupied grid coordinates and depth?

The SDK provides occupied grid coordinates and depth. If you need object classes (car, motorcycle, etc.), our customers have had success applying YOLO to the rectified images.
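One way to combine the two outputs is to take the bounding boxes from a 2D detector run on the rectified image and read the range for each box from the depth map. The sketch below shows only that fusion step, under stated assumptions: the depth map is pixel-aligned with the rectified image, invalid pixels are marked with a value of zero or less, and the detections are already available as `(label, box)` pairs. The detector call itself is not shown, and the function names are illustrative rather than SDK APIs.

```python
from statistics import median


def box_depth_m(depth_map, box):
    """Median depth inside an (x_min, y_min, x_max, y_max) pixel box, ignoring invalid pixels."""
    x0, y0, x1, y1 = box
    values = [
        depth_map[y][x]
        for y in range(y0, y1)
        for x in range(x0, x1)
        if depth_map[y][x] > 0  # assumed convention: 0 or negative marks "no depth"
    ]
    return median(values) if values else None


def annotate(detections, depth_map):
    """Attach a range estimate to each (label, box) pair from a 2D detector."""
    return [(label, box, box_depth_m(depth_map, box)) for label, box in detections]


# Tiny synthetic example: a 3x4 depth map in meters and one detection
depth_map = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 20.0, 22.0, 0.0],
    [0.0, 21.0, 0.0, 0.0],
]
print(annotate([("car", (1, 1, 3, 3))], depth_map))
```

The median is used rather than the mean so that background pixels inside the box and the occasional outlier have less influence on the reported range.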

For an Autonomous Mobile Robot platform, should I choose a 360° stereo camera configuration or a single high-precision LiDAR?

If a platform already provides 360° coverage using cameras, adding additional cameras to form stereo pairs is often an effective approach. By pairing low-cost cameras (on the order of ~$40 per camera in volume), the system can generate high-precision stereo point clouds without introducing a dedicated LiDAR sensor.

How is lens focus mechanically locked when installing lenses onto the camera?

For S-mount lenses, we mechanically secure by tacking the lens threads into the camera body using superglue. We then use a 3M DP420NS Black epoxy to seal the lens-to-camera interface and provide a watertight seal. A detailed assembly procedure is documented here: https://nodarsensor.notion.site/focusing-and-gluing-an-s-mount-lens-to-a-lucid-triton-camera

What is Hammerhead's maximum supported distance?

Hammerhead can detect objects at large distances. With the 16mm (30° field of view) lens option, humans are clearly detectable at 500m. Even with the wider 7mm (65° field-of-view) lens option, humans are clearly detectable at 200m.
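These ranges can be related to image resolution by estimating how many pixels a person spans at each distance. The sketch assumes a pinhole model, the 2880-pixel image width listed for the HDK, and a 1.7 m tall person; the person height and the function names are our assumptions, not NODAR specifications.

```python
import math


def focal_length_px(width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels from horizontal FOV."""
    return (width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def pixels_on_target(size_m: float, range_m: float, focal_px: float) -> float:
    """Approximate extent in pixels of an object of size_m seen at range_m."""
    return focal_px * size_m / range_m


PERSON_HEIGHT_M = 1.7  # assumed for illustration

for hfov_deg, range_m in ((30.0, 500.0), (65.0, 200.0)):
    f_px = focal_length_px(2880, hfov_deg)
    px = pixels_on_target(PERSON_HEIGHT_M, range_m, f_px)
    print(f"{hfov_deg:.0f} deg lens at {range_m:.0f} m: person spans ~{px:.0f} px")
```

Under these assumptions both lens options put a person at roughly 18 to 19 pixels tall at their quoted detection range, which suggests the two figures reflect a similar pixels-on-target threshold.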

How is software integration done for HDK 2.0?

The HDK ships with ROS2 and C++ APIs, along with thorough documentation at https://github.com/nodarhub. Other integration options can be provided as a custom effort.

What type of support is available?

The HDK includes access to a support portal and a dedicated support email address. We aim to reply to critical issues within 24 hours. The SDK includes 12 months of software updates and full developer documentation. For premium support options, contact support@nodarsensor.com.

Why was a 5.4 MP camera selected for the HDK, instead of higher-resolution alternatives (e.g., 4096 × 1200)?

Automotive-grade cameras with GigE interface are currently available up to 5.4 MP from Lucid Vision. Higher-resolution Lucid cameras are not HDR, which is a key requirement for outdoor autonomy use cases. The SDK supports resolutions of 8 MP and higher, typically limited by GPU memory.

How is the HDK’s camera assembly acceptance-tested given the manual assembly steps (lens installation, focus locking, sealing)?

The Acceptance Test Procedure (ATP) serves as the final quality check to verify that each assembled unit meets specifications. Lucid cameras are IP67-rated when M12 and M8 connectors are properly sealed. To address the lens interface as a potential ingress point, we use IP67- or IP69-rated S-mount lenses. We seal the lens-to-housing interface with watertight epoxy. The epoxy is applied externally and is visually inspected during ATP. While the camera assembly is IP67-rated, it is not hermetically sealed; limited air exchange may occur through the M12 and M8 connectors. As the HDK is a reference design, additional ATPs may be required for production deployments.

Can I input stereo data we’ve collected using other hardware?

The SDK provides occupied grid coordinates and depth. For object classes (car, motorcycle, etc), our customers have had success obtaining those by applying YOLO to the rectified images.

Which computing platforms are supported by HDK 2.0?

The HDK includes an NVIDIA Orin processing unit. Hammerhead can also be ported to other processors as a custom project.

What is included in NODAR’s SDK?

The SDK includes all the software needed to evaluate and integrate with Hammerhead. The software includes applications for demoing Hammerhead, collecting data, calibrating the initial camera setup, and C++ and Python APIs. The NODAR Viewer is provided for depth and point-cloud visualization. Our GridDetect occupancy map software is available as an add-on option.

Why should I include GridDetect with the SDK?

GridDetect is a high-performance, GPU-accelerated implementation of a deterministic particle filter algorithm for occupancy grid creation. It converts dense 3D point-cloud data into a robust, real-time understanding of free space and obstacles.

In practical terms, GridDetect adds three key capabilities to the SDK:

High-throughput performance

Processes up to 100 million 3D points per second, enabling real-time operation with dense, long-range stereo data.

Robust ground removal

Effectively separates ground from obstacles, supporting detection of objects as small as a 15 cm brick at 150 m on a highway, as well as subtle features like emerging crops on uneven agricultural terrain.

Terrain-aware reasoning

Correctly handles slopes, hills, and ramps without misclassifying them as obstacles.

Together, these capabilities allow developers to move beyond raw depth data and achieve stable, long-range obstacle detection suitable for automotive, agricultural, and industrial autonomy applications.
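For contrast, here is what a naive occupancy grid looks like when built directly from a point cloud. This is deliberately not GridDetect's particle filter: it is a minimal baseline that bins points into cells and removes ground with a fixed height threshold. The coordinate convention (x forward, y left, z up, in meters) and all parameter values are assumptions for illustration.

```python
from collections import Counter


def occupancy_grid(points, cell_m=0.5, ground_z_m=0.2, min_points=3):
    """Return the set of (ix, iy) grid cells containing enough above-ground points.

    points: iterable of (x, y, z) in meters, with z measured up from a flat ground plane.
    """
    counts = Counter()
    for x, y, z in points:
        if z <= ground_z_m:  # crude flat-ground removal; fails on slopes and ramps
            continue
        cell = (int(x // cell_m), int(y // cell_m))
        counts[cell] += 1
    # Require several points per cell so isolated noise does not mark a cell occupied
    return {cell for cell, n in counts.items() if n >= min_points}


cloud = [
    (10.1, 0.1, 0.5), (10.2, 0.2, 0.6), (10.3, 0.3, 0.7),  # small obstacle
    (5.0, 0.0, 0.0),                                         # ground return
    (20.0, 1.0, 1.0),                                        # isolated noise point
]
print(occupancy_grid(cloud))
```

A fixed height threshold like this would flag a hill or ramp as an obstacle and has no memory between frames, which is the gap that terrain-aware reasoning and temporal filtering are meant to close.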

What camera models are supported by the SDK?

The NODAR SDK is camera-agnostic. We have tested rolling shutter and global shutter RGB cameras, LWIR cameras, and resolutions from 1MP to 8MP.

While any camera is compatible, optimal performance is achieved with synchronized cameras that have overlapping fields of view and provide uncompressed images. The system supports native resolutions up to 8MP, but this is subject to available GPU memory; higher resolutions will require downsampling.

Why was a rolling-shutter sensor selected instead of a global-shutter sensor for stereo vision?

Most commercially available cameras offering very high dynamic range (typically 120–140 dB HDR) use rolling-shutter sensors. High dynamic range is critical for outdoor operation, including performance in shadows, direct sun, dusk, dawn, and other challenging lighting conditions. The SDK supports both rolling-shutter and global-shutter cameras, allowing integrators to select the sensor type that best matches their system requirements.

Is motion blur typically observed with the 5.4 MP rolling-shutter Lucid Vision cameras (e.g., TRI054S-CC) under Hammerhead’s expected operating conditions?

Motion blur is generally not a problem for Hammerhead stereo matching, as blur is typically similar in both cameras and therefore remains matchable.

Blur is most commonly observed when cameras are mounted close to the ground at higher vehicle speeds, or during low-light operation when longer exposure times are required. In these cases, reducing exposure while increasing camera gain can mitigate blur.

The rolling shutter effect is distinct from motion blur and is a distortion that occurs in cameras, causing warping and skewing of fast-moving objects, such as bent propellers and slanted buildings. For autonomous systems, this effect is typically negligible because the rolling shutter distortion of mechanically scanning LiDAR is orders of magnitude larger.

How is NODAR different?

NODAR’s Hammerhead technology enables unparalleled detection of small objects at large distances by supporting large-baseline mounts (0.5-3m or larger) with independently mounted (no connecting bar), high-resolution cameras. This is accomplished with patented algorithms that automatically calibrate in real-time to compensate for misalignments that arise from larger baselines.

Does Hammerhead work in low visibility?

With recent improvements in camera sensors, Hammerhead is effective in low-light conditions, such as city streets or headlight-illuminated scenes at night. We have also tested well against LiDAR in dusty, rainy, and foggy conditions, and Hammerhead technology can also be adapted to work with infrared or thermal cameras.

What is included in Hammerhead’s HDK 2.0?

The HDK includes both hardware and software to evaluate and integrate with Hammerhead. The hardware is comprised of an Electronic Control Unit (ECU), 2 cameras, and all necessary connection cables. The software includes applications for demoing Hammerhead, collecting data, calibrating the initial camera setup, and an integration API.

How long does the HDK installation take?

The HDK is designed to work immediately out of the box. You would only need to connect a power source and a display to see Hammerhead technology in action.

How does calibration work?

Stereo cameras are known to lose alignment due to vehicle vibration, temperature fluctuations, and long-term, small component movements. Using unique patented technology, Hammerhead automatically calibrates on every video frame to compensate for these alignment variations.

What mounting configurations are supported for HDK 2.0?

The entire HDK camera assembly can be mounted to a vehicle or structure via tripod mounting holes. Even though the cameras in the HDK are mounted at a fixed baseline, production versions of Hammerhead can be shipped with custom baselines and mounts depending on specific application requirements.

What is the HDK's camera resolution?

As shipped with the HDK, a 5-megapixel resolution is supported. If desired, additional resolutions can also be supported with different camera hardware.

What computing platforms are supported by the SDK?

The SDK is provided as prebuilt .deb packages for the following configurations:

Ubuntu 20.04

CUDA 11.4 (AMD64, ARM64)

CUDA 12.0 (AMD64)

Ubuntu 22.04

CUDA 12.1, 12.2, 12.3, 12.6, 13.0 (AMD64)

CUDA 12.2, 12.6 (ARM64)Ubuntu 24.04

CUDA 12.9, 13.0 (AMD64)

Typical performance (5.4 MP images)

Jetson Orin AGX: ~5–10 fps

NVIDIA RTX A5500 (Laptop): ~15–20 fps

NVIDIA GeForce RTX 4090 (Desktop): ~20–25 fps

Performance varies based on configuration, including image resolution (1–8 MP), bit depth (8-bit vs. 16-bit), and whether optional modules such as GridDetect are enabled.

As a general guideline:

Modern laptops typically achieve ~15–20 fps

Desktop systems with high-end GPUs typically achieve ~20–30 fps

What are the minimum recommended compute specs for the SDK?

We currently require an NVIDIA GPU. Our binaries rely on Cuda and target Ubuntu 20.04, 22.04, and 24.04 for ARM and AMD64 (Intel and AMD Cpus). For a complete list of supported systems, please visit https://docs.nodarsensor.net/index.html#supported-systems

Does the SDK output classify specific objects, like a car, or the occupied grid coordinates and depth?

The SDK provides occupied grid coordinates and depth. If you want classes of objects (car, motorcycle, etc), our customers have had success with applying YOLO to the rectified images to obtain classes of objects.

For an Autonomous Mobile Robot platform, should I choose a 360° stereo camera configuration or a single high-precision LiDAR?

If a platform already provides 360° coverage using cameras, adding additional cameras to form stereo pairs is often an effective approach. By pairing low-cost cameras (on the order of ~$40 per camera in volume), the system can generate high-precision stereo point clouds without introducing a dedicated LiDAR sensor.

How is lens focus mechanically locked when installing lenses onto the camera?

For S-mount lenses, we mechanically secure by tacking the lens threads into the camera body using superglue. We then use a 3M DP420NS Black epoxy to seal the lens-to-camera interface and provide a watertight seal. A detailed assembly procedure is documented here: https://nodarsensor.notion.site/focusing-and-gluing-an-s-mount-lens-to-a-lucid-triton-camera

What is Hammerhead's maximum supported distance?

Hammerhead can detect objects at large distances. With the 16mm (30° field of view) lens option, humans are clearly detectable at 500m. Even with the wider 7mm (65° field-of-view) lens option, humans are clearly detectable at 200m.

How is software integration done for HDK 2.0?

The HDK ships with ROS2 and C++ APIs, along with thorough documentation at https://github.com/nodarhub. Other integration options can be provided as a custom effort.

What type of support is available?

The HDK includes access to a support portal and a dedicated support email address. We aim to reply to critical issues within 24 hours. The SDK includes 12 months of software updates and full developer documentation. For premium support options, contact support@nodarsensor.com.

Why was a 5.4 MP camera selected for the HDK, instead of higher-resolution alternatives (e.g., 4096 × 1200)?

Automotive-grade cameras with GigE interface are currently available up to 5.4 MP from Lucid Vision. Higher-resolution Lucid cameras are not HDR, which is a key requirement for outdoor autonomy use cases. The SDK supports resolutions of 8 MP and higher; typically limited by GPU memory.

How is the HDK’s camera assembly acceptance-tested given the manual assembly steps (lens installation, focus locking, sealing)?

The Acceptance Test Procedure (ATP) serves as the final quality check to verify that each assembled unit meets specifications. Lucid cameras are IP67-rated when M12 and M8 connectors are properly sealed. To address the lens interface as a potential ingress point, we use IP67- or IP69-rated S-mount lenses. We seal the lens-to-housing interface with watertight epoxy.The epoxy is applied externally and is visually inspected during ATP. While the camera assembly is IP67-rated, it is not hermetically sealed; limited air exchange may occur through the M12 and M8 connectors. As the HDK is a reference design, additional ATPs may be required for production deployments.

Can I input stereo data we’ve collected using other hardware?

The SDK provides occupied grid coordinates and depth. For object classes (car, motorcycle, etc), our customers have had success obtaining those by applying YOLO to the rectified images.

Which computing platforms are supported by HDK 2.0?

The HDK includes an NVIDIA Orin processing unit. Hammerhead can also be ported to other processors as a custom project.

What is included in NODAR’s SDK?

The SDK includes all the software needed to evaluate and integrate with Hammerhead. The software includes applications for demoing Hammerhead, collecting data, calibrating the initial camera setup, and C++ and Python APIs. The NODAR Viewer is provided for depth and point-cloud visualization. Our GridDetect occupancy map software is available as an add-on option.

Why should I include GridDetect with the SDK?

GridDetect is a high-performance, GPU-accelerated implementation of a deterministic particle filter algorithm for occupancy grid creation. It converts dense 3D point-cloud data into a robust, real-time understanding of free space and obstacles.

In practical terms, GridDetect adds three key capabilities to the SDK:

High-throughput performance

Processes up to 100 million 3D points per second, enabling real-time operation with dense, long-range stereo data.

Robust ground removal

Effectively separates ground from obstacles, supporting detection of objects as small as a 15 cm brick at 150 m on a highway, as well as subtle features like emerging crops on uneven agricultural terrain.

Terrain-aware reasoning

Correctly handles slopes, hills, and ramps without misclassifying them as obstacles.

Together, these capabilities allow developers to move beyond raw depth data and achieve stable, long-range obstacle detection suitable for automotive, agricultural, and industrial autonomy applications.
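To put the 100 million points per second figure in context, the sketch below works out the point rate of a dense depth stream. It assumes one 3D point per image pixel, which is an illustrative simplification and not a statement about how GridDetect samples its input; the function names are hypothetical.

```python
# Illustrative arithmetic only. One 3D point per pixel is an assumption.
GRIDDETECT_MAX_POINTS_PER_S = 100e6  # "up to 100 million 3D points per second"


def points_per_second(megapixels: float, fps: float) -> float:
    """Point rate of a dense depth stream, assuming one point per pixel."""
    return megapixels * 1e6 * fps


def max_fps_within_budget(megapixels: float,
                          budget: float = GRIDDETECT_MAX_POINTS_PER_S) -> float:
    """Highest frame rate whose point rate still fits inside the budget."""
    return budget / (megapixels * 1e6)


if __name__ == "__main__":
    for mp, fps in [(5.4, 10), (5.4, 20), (8.0, 20)]:
        rate = points_per_second(mp, fps)
        fits = rate <= GRIDDETECT_MAX_POINTS_PER_S
        print(f"{mp} MP @ {fps} fps -> {rate / 1e6:.0f} M points/s, fits: {fits}")
```

Under this assumption, a 5.4 MP stream at 10 fps sits well inside the stated throughput, while higher resolutions or frame rates approach or exceed it.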

What camera models are supported by the SDK?

The NODAR SDK is camera-agnostic. We have tested rolling-shutter and global-shutter RGB cameras, LWIR cameras, and resolutions from 1 MP to 8 MP.

While any camera is compatible, optimal performance is achieved with synchronized cameras that have overlapping fields of view and provide uncompressed images. The system supports native resolutions up to 8 MP, subject to available GPU memory; higher resolutions require downsampling.
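As a rough illustration of the downsampling step, the sketch below computes a target image size that stays under a pixel budget while preserving aspect ratio. The 8 MP default mirrors the figure above, but the real limit depends on GPU memory; the function name is hypothetical and not part of the SDK.

```python
import math


def downsample_to_limit(width: int, height: int,
                        max_megapixels: float = 8.0) -> tuple[int, int]:
    """Return (width, height) scaled down to fit within max_megapixels.

    Images already within the limit are returned unchanged. The aspect
    ratio is preserved up to rounding.
    """
    limit = max_megapixels * 1e6
    pixels = width * height
    if pixels <= limit:
        return width, height
    scale = math.sqrt(limit / pixels)  # same factor on both axes
    return max(1, math.floor(width * scale)), max(1, math.floor(height * scale))


if __name__ == "__main__":
    print(downsample_to_limit(2880, 1860))  # about 5.4 MP, left unchanged
    print(downsample_to_limit(4096, 3000))  # about 12.3 MP, scaled down
```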

Why was a rolling-shutter sensor selected instead of a global-shutter sensor for stereo vision?

Most commercially available cameras offering very high dynamic range (typically 120–140 dB HDR) use rolling-shutter sensors. High dynamic range is critical for outdoor operation, including performance in shadows, direct sun, dusk, dawn, and other challenging lighting conditions. The SDK supports both rolling-shutter and global-shutter cameras, allowing integrators to select the sensor type that best matches their system requirements.

Is motion blur typically observed with the 5.4 MP rolling-shutter Lucid Vision cameras (e.g., TRI054S-CC) under Hammerhead’s expected operating conditions?

Motion blur is generally not a problem for Hammerhead stereo matching, as blur is typically similar in both cameras and therefore remains matchable.

Blur is most commonly observed when cameras are mounted close to the ground at higher vehicle speeds, or during low-light operation when longer exposure times are required. In these cases, reducing exposure while increasing camera gain can mitigate blur.
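The exposure and gain trade-off can be estimated with simple pinhole geometry. The sketch below is an approximation for motion perpendicular to the optical axis, and it assumes gain is expressed in dB as 20·log10 of the amplification, which is common for camera gain settings. All numeric values and function names are hypothetical.

```python
import math


def blur_pixels(focal_px: float, speed_mps: float,
                exposure_s: float, distance_m: float) -> float:
    """Approximate blur length in pixels for lateral relative motion."""
    return focal_px * speed_mps * exposure_s / distance_m


def gain_increase_db(old_exposure_s: float, new_exposure_s: float) -> float:
    """Gain (dB) needed to keep brightness when exposure is shortened."""
    return 20.0 * math.log10(old_exposure_s / new_exposure_s)


if __name__ == "__main__":
    focal_px = 2000.0  # hypothetical focal length in pixels
    for exposure in (0.005, 0.001):
        blur = blur_pixels(focal_px, speed_mps=20.0,
                           exposure_s=exposure, distance_m=5.0)
        print(f"exposure {exposure * 1e3:.0f} ms -> blur ~{blur:.0f} px")
    print(f"extra gain for 5 ms -> 1 ms: {gain_increase_db(0.005, 0.001):.1f} dB")
```

Blur scales linearly with exposure time and inversely with distance, which is consistent with blur being most visible for nearby ground at speed.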

The rolling-shutter effect is distinct from motion blur: it is a geometric distortion that warps and skews fast-moving objects, producing artifacts such as bent propellers and slanted buildings. For autonomous systems, this effect is typically negligible by comparison, because the equivalent scan distortion of mechanically scanning LiDAR is orders of magnitude larger.

How is NODAR different?

NODAR’s Hammerhead technology enables unparalleled detection of small objects at large distances by supporting large-baseline mounts (0.5–3 m or larger) with independently mounted (no connecting bar), high-resolution cameras. This is accomplished with patented algorithms that automatically calibrate in real time to compensate for the misalignments that arise with larger baselines.
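Why a larger baseline helps at range follows from textbook stereo geometry: depth is Z = f·B/d, so a fixed disparity error produces a depth error that grows with Z² and shrinks with the baseline B. The sketch below uses this standard pinhole model, not NODAR's proprietary algorithms; the focal length and the 0.1 px disparity error are assumed values for illustration.

```python
def disparity_px(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Disparity d = f * B / Z for an ideal rectified stereo pair."""
    return focal_px * baseline_m / depth_m


def depth_error_m(focal_px: float, baseline_m: float, depth_m: float,
                  disparity_error_px: float = 0.1) -> float:
    """First-order depth error: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    focal_px = 5000.0  # assumed focal length in pixels
    for baseline in (0.1, 0.5, 1.0, 3.0):
        err = depth_error_m(focal_px, baseline, depth_m=150.0)
        print(f"baseline {baseline:.1f} m -> depth error at 150 m ~{err:.2f} m")
```

In this model, going from a 0.5 m to a 3 m baseline reduces the depth error at a given range by a factor of six, provided calibration keeps the disparity error constant.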

Does Hammerhead work in low visibility?

With recent improvements in camera sensors, Hammerhead is effective in low-light conditions, such as city streets or headlight-illuminated scenes at night. In our tests, Hammerhead has also performed well against LiDAR in dusty, rainy, and foggy conditions. Hammerhead technology can also be adapted to work with infrared or thermal cameras.

What is included in Hammerhead’s HDK 2.0?

The HDK includes both hardware and software to evaluate and integrate with Hammerhead. The hardware comprises an Electronic Control Unit (ECU), two cameras, and all necessary connection cables. The software includes applications for demoing Hammerhead, collecting data, and calibrating the initial camera setup, plus an integration API.

How long does the HDK installation take?

The HDK is designed to work immediately out of the box. You only need to connect a power source and a display to see Hammerhead technology in action.

How does calibration work?

Stereo cameras are known to lose alignment due to vehicle vibration, temperature fluctuations, and long-term, small component movements. Using unique patented technology, Hammerhead automatically calibrates on every video frame to compensate for these alignment variations.

What mounting configurations are supported for HDK 2.0?

The entire HDK camera assembly can be mounted to a vehicle or structure via tripod mounting holes. Even though the cameras in the HDK are mounted at a fixed baseline, production versions of Hammerhead can be shipped with custom baselines and mounts depending on specific application requirements.

What is the HDK's camera resolution?

As shipped with the HDK, a 5-megapixel resolution is supported. If desired, additional resolutions can also be supported with different camera hardware.

What computing platforms are supported by the SDK?

The SDK is provided as prebuilt .deb packages for the following configurations:

Ubuntu 20.04

CUDA 11.4 (AMD64, ARM64)

CUDA 12.0 (AMD64)

Ubuntu 22.04

CUDA 12.1, 12.2, 12.3, 12.6, 13.0 (AMD64)

CUDA 12.2, 12.6 (ARM64)

Ubuntu 24.04

CUDA 12.9, 13.0 (AMD64)

Typical performance (5.4 MP images)

NVIDIA Jetson AGX Orin: ~5–10 fps

NVIDIA RTX A5500 (Laptop): ~15–20 fps

NVIDIA GeForce RTX 4090 (Desktop): ~20–25 fps

Performance varies based on configuration, including image resolution (1–8 MP), bit depth (8-bit vs. 16-bit), and whether optional modules such as GridDetect are enabled.

As a general guideline:

Modern laptops typically achieve ~15–20 fps

Desktop systems with high-end GPUs typically achieve ~20–30 fps
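The package matrix in this answer can be encoded as a small lookup for checking a target system before installation. The table below is transcribed from the list above and the helper name is hypothetical; the official supported-systems documentation remains the authoritative source.

```python
# Transcribed from the package list in this answer; treat the official
# documentation as authoritative if the two ever differ.
SUPPORTED = {
    "20.04": {"11.4": {"amd64", "arm64"}, "12.0": {"amd64"}},
    "22.04": {
        "12.1": {"amd64"},
        "12.2": {"amd64", "arm64"},
        "12.3": {"amd64"},
        "12.6": {"amd64", "arm64"},
        "13.0": {"amd64"},
    },
    "24.04": {"12.9": {"amd64"}, "13.0": {"amd64"}},
}


def is_supported(ubuntu: str, cuda: str, arch: str) -> bool:
    """Return True if a prebuilt package is listed for this combination."""
    return arch.lower() in SUPPORTED.get(ubuntu, {}).get(cuda, set())


if __name__ == "__main__":
    print(is_supported("22.04", "12.6", "ARM64"))
    print(is_supported("24.04", "12.9", "arm64"))
```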

What are the minimum recommended compute specs for the SDK?

We currently require an NVIDIA GPU. Our binaries rely on CUDA and target Ubuntu 20.04, 22.04, and 24.04 on ARM64 and AMD64 (Intel and AMD CPUs). For a complete list of supported systems, please visit https://docs.nodarsensor.net/index.html#supported-systems

Does the SDK output classify specific objects, like a car, or the occupied grid coordinates and depth?

The SDK provides occupied grid coordinates and depth. For object classes (car, motorcycle, etc.), our customers have had success applying YOLO to the rectified images.
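One way to combine the two outputs is to look up the depth inside each detected bounding box. The sketch below assumes the depth map is pixel-aligned with the rectified image, that invalid pixels are stored as 0, and that boxes come from any 2D detector such as YOLO. These are illustrative assumptions, and the function is hypothetical rather than part of the SDK.

```python
from statistics import median


def box_depth_m(depth_map, box):
    """Median valid depth inside a box.

    depth_map: list of rows of depths in metres (0 means invalid, assumed).
    box: (x_min, y_min, x_max, y_max) in pixels, upper bounds exclusive.
    Returns None when the box contains no valid depth.
    """
    x0, y0, x1, y1 = box
    values = [d for row in depth_map[y0:y1] for d in row[x0:x1] if d > 0]
    return median(values) if values else None


if __name__ == "__main__":
    depth = [
        [0.0, 10.0, 10.0, 50.0],
        [0.0, 10.0, 12.0, 50.0],
        [0.0, 0.0, 0.0, 50.0],
    ]
    detections = [("car", (1, 0, 3, 2)), ("unknown", (0, 0, 1, 3))]
    for label, box in detections:
        print(label, box_depth_m(depth, box))
```

The median is used here because it is less sensitive than the mean to background pixels that fall inside the box.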

For an Autonomous Mobile Robot platform, should I choose a 360° stereo camera configuration or a single high-precision LiDAR?

If a platform already provides 360° coverage using cameras, adding cameras to form stereo pairs is often an effective approach. By pairing low-cost cameras (roughly $40 per camera in volume), the system can generate high-precision stereo point clouds without introducing a dedicated LiDAR sensor.

How is lens focus mechanically locked when installing lenses onto the camera?

For S-mount lenses, we mechanically secure the lens by tacking the lens threads to the camera body with superglue. We then apply 3M DP420NS Black epoxy to the lens-to-camera interface to provide a watertight seal. A detailed assembly procedure is documented here: https://nodarsensor.notion.site/focusing-and-gluing-an-s-mount-lens-to-a-lucid-triton-camera

What is Hammerhead's maximum supported distance?

Hammerhead can detect objects at large distances. With the 16 mm (30° field of view) lens option, humans are clearly detectable at 500 m. Even with the wider 7 mm (65° field of view) lens option, humans are clearly detectable at 200 m.
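To relate these ranges to image resolution, the sketch below estimates how many pixels a target spans using a pinhole model. It assumes the quoted field of view is horizontal, an image width of 2880 px for the 5.4 MP camera, and a 0.5 m wide person; none of these values are stated in this FAQ, so they are illustrative only.

```python
import math


def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Focal length in pixels from image width and horizontal FOV."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def pixels_on_target(size_m: float, distance_m: float,
                     image_width_px: int, hfov_deg: float) -> float:
    """Approximate number of pixels spanned by a target of a given size."""
    return focal_length_px(image_width_px, hfov_deg) * size_m / distance_m


if __name__ == "__main__":
    width_px = 2880  # assumed image width for a 5.4 MP sensor
    for hfov, dist in [(30.0, 500.0), (65.0, 200.0)]:
        px = pixels_on_target(0.5, dist, width_px, hfov)
        print(f"{hfov:.0f} deg FOV, {dist:.0f} m: ~{px:.1f} px across a 0.5 m target")
```

Under these assumptions, both lens options place a similar number of pixels on a person at their respective quoted ranges.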

How is software integration done for HDK 2.0?

The HDK ships with ROS2 and C++ APIs, along with thorough documentation at https://github.com/nodarhub. Other integration options can be provided as a custom effort.

What type of support is available?

The HDK includes access to a support portal and a dedicated support email address. We aim to reply to critical issues within 24 hours. The SDK includes 12 months of software updates and full developer documentation. For premium support options, contact support@nodarsensor.com.

Why was a 5.4 MP camera selected for the HDK, instead of higher-resolution alternatives (e.g., 4096 × 1200)?

Automotive-grade cameras with a GigE interface are currently available up to 5.4 MP from Lucid Vision. Higher-resolution Lucid cameras are not HDR, which is a key requirement for outdoor autonomy use cases. The SDK itself supports resolutions of 8 MP and higher; the practical limit is typically set by GPU memory.
